At the frontlines in the battle for SEO is Google Search Console (GSC), an amazing tool that helps you monitor and improve your visibility in search engine results pages (SERPs) and provides an in-depth analysis of the web traffic being routed to your doorstep. And it does all this for free.

If your website marks your presence in cyberspace, GSC helps you grow its viewership, which in turn can increase traffic, conversions, and sales. In this guide, SEO strategists at Miromind explain how you benefit from GSC, how to connect it to your website, and how to use its reports to plan your brand’s search dominance.

What is Google Search Console (GSC)?

Google Webmaster Tools (GWT) was created by Google as a free service aimed at webmasters. GWT later evolved into its present form, Google Search Console (GSC), a cutting-edge tool used by a fast-diversifying group of digital marketing professionals, web designers, app developers, SEO specialists, and business entrepreneurs.

For the uninitiated, GSC tells you a great deal about how your website performs in Google Search and how people find it. For example, it shows how much search traffic you’re attracting, which queries people type into Google to reach your site, the devices (desktop, mobile, tablet) they use to find you, and, more importantly, which pages and queries make your site popular.

GSC then takes you on a deeper dive to find and fix crawling and indexing errors, submit sitemaps, and test important files such as robots.txt.

Precisely what does Google Search Console do for you? These are the benefits.

1. Search engine visibility improves

Ever experienced the sinking sensation of having done everything demanded of you to create a great website, only to find that the people who matter can’t locate you in a simple search? Search Console lets you tell Google that your site exists, for example by submitting a sitemap, and shows you whether your pages have been indexed.

2. The virtual image remains current and updated

When you’ve fixed broken links and coding issues, Search Console lets you ask Google to recrawl the affected pages so that Google Search carries an accurate, up-to-date snapshot of your site, minus its flaws.

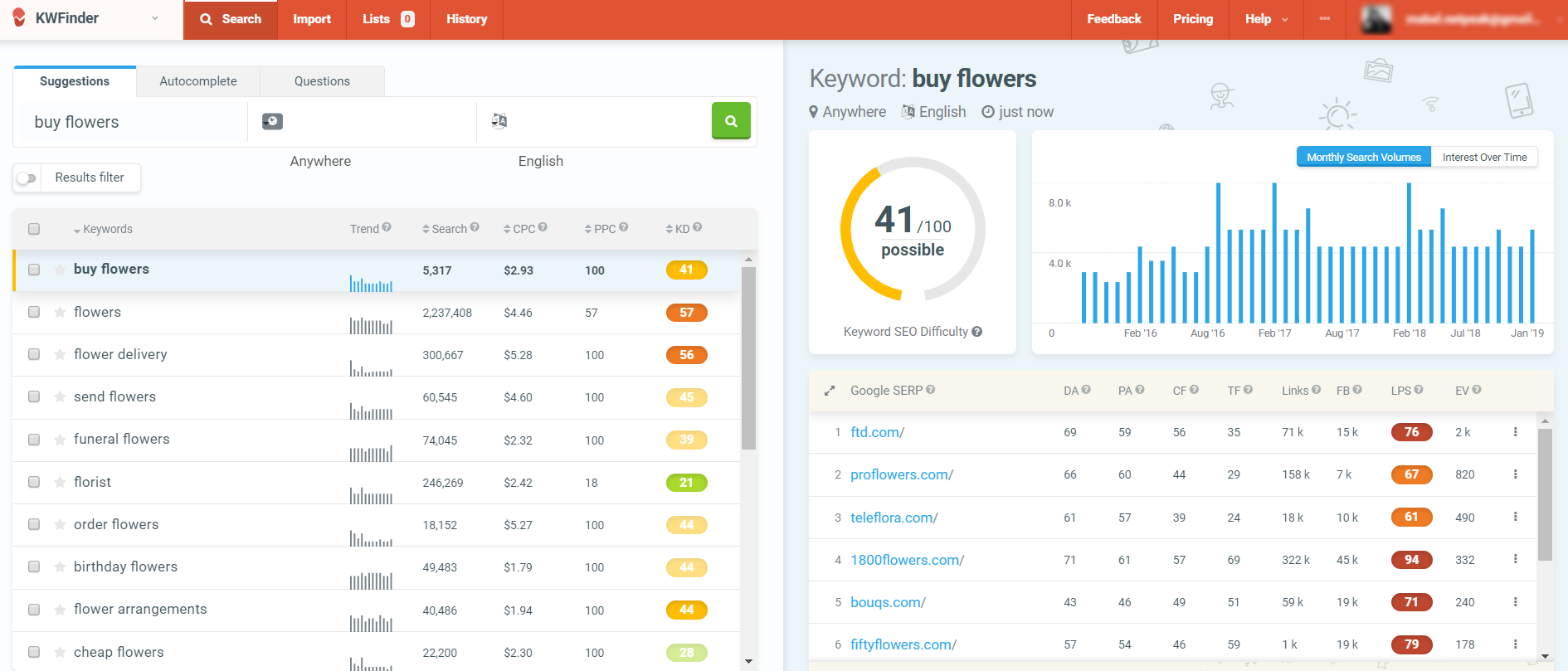

3. Keywords are better optimized to attract traffic

Wouldn’t you agree that knowing what draws people to your website can help you shape a better user experience? Search Console opens a window to the search queries (keywords and key phrases) that most often bring people to your site, along with the clicks, impressions, and average position for each. Armed with this knowledge, you can optimize your pages to respond better to specific keywords.

4. Safety from cyber threats

Can you expect to grow a business without adequate protection against external threats? Search Console’s Security Issues report alerts you if Google detects that your site has been hacked or is serving malware or spam, so you can clean it up and protect your growing business against cyber threats.

5. Content figures prominently in rich results

It’s not enough to merely appear in a search result. How effectively are your pages making it into Google’s rich results? These are the enhanced cards and snippets that display extra details such as ratings and reviews, giving people searching for you a better user experience. Search Console gives you a status report on how your content is appearing in rich results, including any structured-data errors, so you can remedy a deficit if one is detected.

6. Site becomes better equipped for AMP compliance

You’re probably aware that mobile-friendliness has become a search engine ranking factor, and that speed matters too: the faster your pages load on mobile, the more user-friendly they’re deemed to be. One way to serve fast mobile pages is to adopt Accelerated Mobile Pages (AMP), and if you do, Search Console helpfully flags the pages that aren’t AMP-compliant.

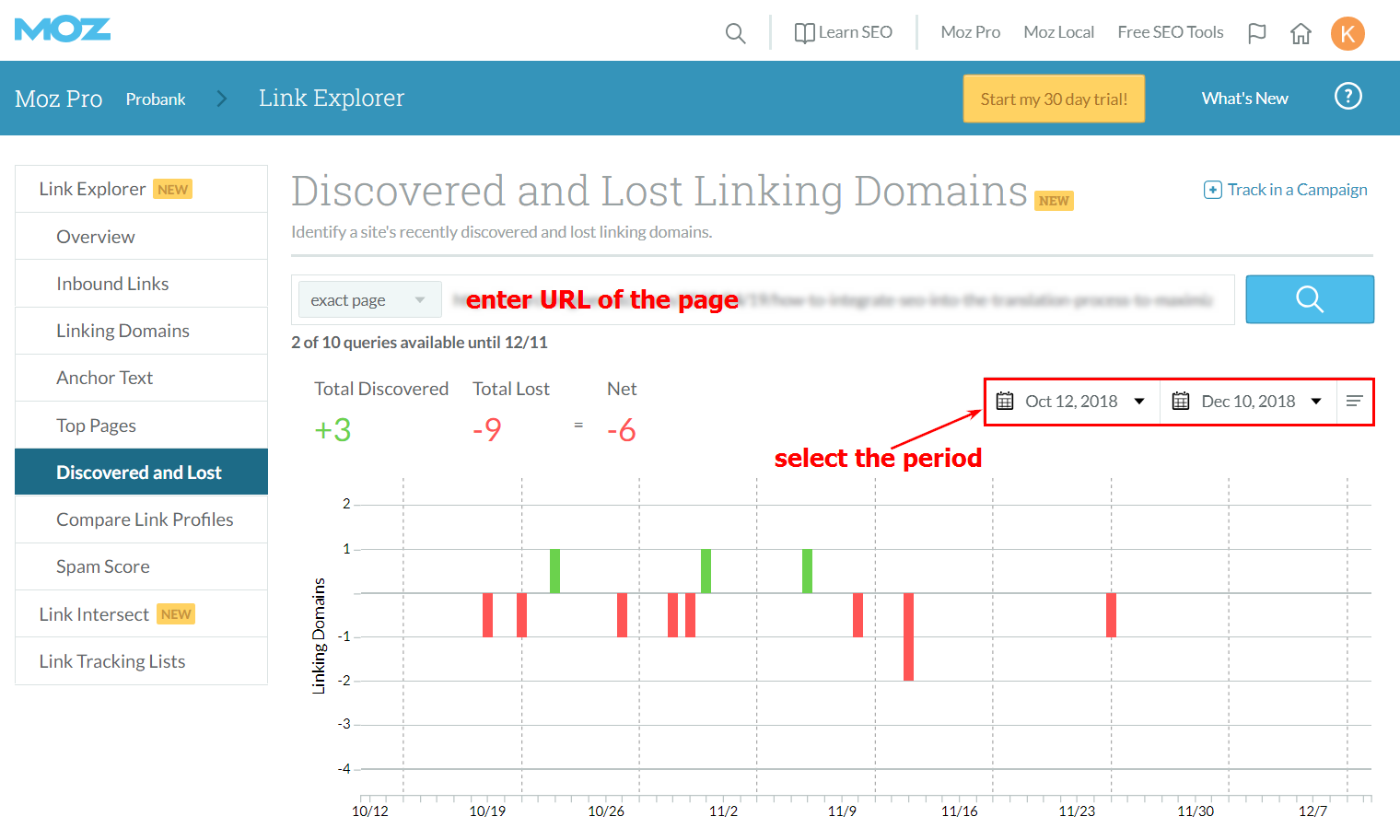

7. Backlink analysis

Backlinks, the links from other websites to yours, give Google an indication of your site’s popularity and how worthy you are of citation. Search Console’s Links report gives you an overview of the websites linking to you, your most-linked pages, and the anchor text other sites use, so you get a deeper insight into what drives and sustains your popularity.

8. The site becomes faster and more responsive to mobile users

If slow loading speeds or other glitches are frustrating mobile searchers, Search Console’s mobile usability and speed reports flag the affected pages so you can take remedial steps and become more mobile-friendly.

9. Google indexing keeps pace with real-time website changes

Significant changes that you make on the website could take weeks to show up in the Google Search index if you sit tight and do nothing. With Search Console, you can request indexing of the pages you’ve edited, changed, or modified, which usually gets them recrawled sooner, although Google doesn’t guarantee exactly when the changes will appear.

By now you have a pretty good idea why Google Search Console has become a must-have tool for optimizing your website pages for better search results. It also helps ensure that your business grows in tandem with the traffic that you’re attracting and converting.

Your step-by-step guide on how to use Google Search Console

1. How to set up your unique Google Search Console account

Assuming that you’re entirely new to GSC, your immediate priority is to add your site to the tool and get it verified by Google. By doing this, you prove to Google that you’re authorized to manage the site, whether you’re its webmaster or another authorized user.

This simple precaution is necessary because you’ll be privy to an incredibly rich source of information that Google doesn’t want unauthorized users to access.

You can use your existing Google account (or create a new one) to access Google Search Console. If you already use Google Analytics, you can log in to GSC with the same Google account. Your next step is to open the console and click “Add property”.

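Enter your website URL in the box that appears to connect your site to the console, so you can start using its incredible array of features. Enter the URL exactly as your site serves it, including the protocol (“http://” or “https://”) and whether it uses “www”, because Search Console treats each version as a separate site and only loads data for the one you add.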
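To see why the exact prefix matters, here is a minimal Python sketch, assuming a URL-prefix property, that lists the four protocol and host combinations a site can be served under and checks whether a page URL falls inside a given property. The function names and the example.com domain are illustrative only; they are not part of Search Console.

```python
from urllib.parse import urlsplit


def url_prefix_variants(host: str) -> list[str]:
    """List the four http/https and www/non-www versions of a site.

    Search Console treats each of these as a different URL-prefix property.
    """
    bare = host[4:] if host.startswith("www.") else host
    return [
        f"{scheme}://{prefix}{bare}/"
        for scheme in ("http", "https")
        for prefix in ("", "www.")
    ]


def property_covers(property_url: str, page_url: str) -> bool:
    """True if page_url shares the property's protocol, host, and path prefix."""
    prop, page = urlsplit(property_url), urlsplit(page_url)
    return (
        prop.scheme == page.scheme
        and prop.netloc == page.netloc
        and (page.path or "/").startswith(prop.path or "/")
    )


print(url_prefix_variants("www.example.com"))
print(property_covers("https://www.example.com/", "https://www.example.com/blog"))  # True
print(property_covers("https://www.example.com/", "http://www.example.com/blog"))   # False
```

Pages served over http:// are invisible to an https:// property, which is why the prefix you enter should match the version of the site visitors actually land on.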

2. How to enable Google to verify your site ownership

Option one

How to add an HTML tag to help Google verify ownership

Once you’ve added your site, Google will want to verify that you own it. At this stage, it helps to have some experience of working with HTML. It’ll make the files you’re uploading easier to handle, give you a better appreciation of how your website’s size influences Google’s crawl rate, and help you understand which Google programs are already running on your website.

If all this sounds like rocket science, don’t fret because we’ll be hand-holding you through the process.

Your next step is to open your homepage code and paste the HTML tag provided by Search Console within the `<head>` section of your site’s HTML code.

The newly pasted tag can coexist with any other code in the `<head>` section; it’s of no consequence.

An issue arises only if you don’t see a `<head>` section, in which case you’ll need to create one to embed the Search Console tag so that Google can verify your site.

Save your work, then come back to the homepage and view its source code; the verification tag should be clearly visible in the `<head>` section, confirming that you have embedded it correctly.

Your next step is to navigate back to the console dashboard and click “Verify”.

At this stage, you’ll see one of two messages: a screen confirming that Google has verified the site, or an error listing the onsite problems that need to be rectified before verification can be completed. Once verification succeeds, Google recognizes you as an owner of the site. Remember that the verification tag must stay in place: if it’s later altered or removed, the site can lose its verified status, undoing all your good work and leaving your site in limbo.
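If verification fails, or you’d like to double-check the placement yourself, the Python sketch below uses the standard library’s HTML parser to look for a `google-site-verification` meta tag and report whether it sits inside `<head>`. It assumes you’ve already saved the page’s HTML as a string; the token `abc123` is a placeholder for the value Search Console gives you.

```python
from html.parser import HTMLParser


class VerificationTagFinder(HTMLParser):
    """Collects google-site-verification tokens, split by whether they are in <head>."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.tokens_in_head: list[str] = []
        self.tokens_elsewhere: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "body":
            self.in_head = False  # an unclosed <head> ends where <body> begins
        elif tag == "meta":
            attributes = dict(attrs)
            if attributes.get("name") == "google-site-verification":
                token = attributes.get("content") or ""
                bucket = self.tokens_in_head if self.in_head else self.tokens_elsewhere
                bucket.append(token)

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def check_verification_tag(html: str, expected_token: str) -> str:
    finder = VerificationTagFinder()
    finder.feed(html)
    finder.close()
    if expected_token in finder.tokens_in_head:
        return "ok: tag found in <head>"
    if expected_token in finder.tokens_elsewhere:
        return "misplaced: tag found outside <head>"
    return "missing: tag not found"


page = """<html><head><title>Home</title>
<meta name="google-site-verification" content="abc123" />
</head><body><h1>Welcome</h1></body></html>"""
print(check_verification_tag(page, "abc123"))  # ok: tag found in <head>
```

A “misplaced” result means the tag made it onto the page but not into the section Google checks.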

Getting Google Search Console to verify a WordPress website using an HTML tag

Even if you have a WordPress site, there’s no escape from the verification protocol if you want to link the site to reap the benefits of GSC.

Assuming that you’ve come through the stage of adding your site to GSC as a new property, this is what you do.

The WordPress SEO plugin by Yoast is widely acknowledged to be an awesome SEO solution tailor-made for WordPress websites. Installing and activating the plugin gives you a conduit to the Google Search Console.

Once Yoast is activated, open the Google Search Console verification page, and click the “Alternate methods” tab to get to the HTML tag.

You’ll see a central box showing a meta tag, with some instructions above it. Ignore these instructions, and select and copy only the verification code, the value in quotation marks after content= near the end of the tag (not the whole tag).

Now return to your WordPress admin dashboard and click through to SEO > Dashboard. On the new screen, click “Webmaster tools” to open the “Webmaster tools verification” window. The window displays three boxes; paste the code you copied into the Google Search Console box, and save the changes.

Now all you have to do is return to Google Search Console and click “Verify”, and the console will confirm that verification was successful. You’re now ready to use GSC on your WordPress site.
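Because Yoast expects only the code and not the whole tag, it’s easy to paste too much. Here is a small Python sketch, using only the standard library, that pulls the `content` value out of the meta tag Search Console displays. The tag in the example is made up; substitute the one shown in your own console.

```python
from html.parser import HTMLParser


class _VerificationCode(HTMLParser):
    """Remembers the content value of a google-site-verification meta tag."""

    def __init__(self) -> None:
        super().__init__()
        self.code = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and attributes.get("name") == "google-site-verification":
            self.code = attributes.get("content")


def extract_verification_code(meta_tag: str) -> str:
    """Return only the content="..." value, which is what goes in Yoast's box."""
    parser = _VerificationCode()
    parser.feed(meta_tag)
    parser.close()
    if not parser.code:
        raise ValueError("no google-site-verification meta tag found")
    return parser.code


tag = '<meta name="google-site-verification" content="AbC123-xyz_789" />'
print(extract_verification_code(tag))  # AbC123-xyz_789
```

Parsing the tag rather than slicing the string means the result doesn’t depend on attribute order or spacing.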

Option two

How to upload an HTML file to help Google verify ownership

This is your second verification option. Once you’re in Google Search Console, proceed from “Manage site” to “Verify this site” to locate the “HTML file upload” option. If you don’t find the option under the recommended method, try the “Other verification methods”.

Once you’re there, you’ll be prompted to download an HTML file which must be uploaded in its specified location. If you change the file in any manner, Search Console won’t be able to verify the site, so take care to maintain the integrity of the download.

After the HTML file has been uploaded, go back to Search Console and click “Verify”. If everything has been uploaded correctly, you’ll see a page confirming that the site has been verified.

Once again, as in the first option we’ve listed, don’t change, modify, or delete the HTML file as that’ll bring the site back to the unverified status.
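Two things commonly go wrong with this method: the file isn’t in the site’s root directory, or its contents get altered along the way. This Python sketch, assuming a hypothetical file name `google1234567890abcdef.html` and sample file contents, builds the root-level URL the file should be served from and compares the served bytes with your original download. The fetch helper uses `urllib.request` and needs network access, so the example only calls the offline parts.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def verification_file_url(site_url: str, filename: str) -> str:
    """The file belongs in the root directory, whatever page URL you start from."""
    return urljoin(site_url, "/" + filename)


def fetch_bytes(url: str, timeout: float = 10.0) -> bytes:
    """Download the file exactly as your server serves it (requires network access)."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()


def file_is_intact(downloaded: bytes, served: bytes) -> bool:
    # Strict byte-for-byte comparison; Google may be more lenient,
    # but this errs on the safe side.
    return downloaded == served


name = "google1234567890abcdef.html"  # hypothetical file name
print(verification_file_url("https://www.example.com/shop/", name))
# https://www.example.com/google1234567890abcdef.html

original = b"google-site-verification: google1234567890abcdef.html"  # sample contents
print(file_is_intact(original, original + b"\n"))  # False: an extra newline was added
```

In practice you would pass `fetch_bytes(verification_file_url(...))` as the `served` argument.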

Option three

Using the Google Tag Manager route for site verification

Before you venture into the Google Search Console, you might find it useful to get the hang of Google Tag Manager (GTM). It’s a free tool that helps you manage and maneuver marketing and analytics tags on your website or app.

You’ll observe that GTM doubles as a useful tool to simplify site verification for Google Search Console. If you intend to use GTM for site verification, there are two precautions you need to take. First, open your GTM account and make sure the “View, Edit, and Manage” permission is enabled for you.

Second, ensure that the GTM container code is placed immediately after the opening `<body>` tag in your HTML code.

Once you’re done with these simple steps, return to GSC and follow this route: Manage site > Verify this site > Google Tag Manager. After you click “Verify”, you should get a message indicating that the site has been verified.

Once again, as with the previous options, never change or remove the GTM code on your site, as that may return the site to its unverified status.

Option four

Verifying your ownership through your domain name provider

This option lets you prove to Google that you are the absolute owner of the domain you’ve purchased, along with all its subdomains and directories, by verifying through your domain name provider or the server where your domain is hosted.

Open the Search Console dashboard and zero in on the “Verify this site” option under “Manage site”.

You should be able to locate the “Domain name provider” option under either the “Recommended method” or the “Alternate methods” tab. Once you select “Domain name provider”, you’ll see a list of domain hosting sites that Google provides for easy reference.

At this stage, you have two options.

If your provider appears in the list, select it and follow the instructions Google displays. If your host doesn’t show up in the list, click the “Other” tab to receive guidelines on creating a DNS TXT record with your domain provider. In some instances, a TXT record may not work with your provider; if that mirrors your dilemma, you can create a CNAME record instead, following the instructions Google gives for your provider.
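For the DNS route, the TXT record’s value takes the form `google-site-verification=` followed by the token Search Console shows you. Here is a Python sketch that formats such a record as a zone-file line and checks the values returned by a lookup such as `dig +short TXT example.com`. The one-hour TTL, the domain, and the token are placeholders, and your provider’s control panel may only ask you for the value itself.

```python
def txt_record_line(domain: str, token: str, ttl: int = 3600) -> str:
    """Format a verification TXT record the way it would appear in a zone file."""
    fqdn = domain.rstrip(".") + "."
    return f'{fqdn}\t{ttl}\tIN\tTXT\t"google-site-verification={token}"'


def has_verification_record(txt_values: list[str], token: str) -> bool:
    """txt_values: one TXT string per line, quoted as `dig +short` prints them."""
    wanted = f"google-site-verification={token}"
    return any(value.strip().strip('"') == wanted for value in txt_values)


print(txt_record_line("example.com", "abc123"))
dig_output = '"v=spf1 include:_spf.google.com ~all"\n"google-site-verification=abc123"'
print(has_verification_record(dig_output.splitlines(), "abc123"))  # True
```

DNS changes can take a while to propagate, so an empty lookup right after you add the record doesn’t necessarily mean it’s wrong.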

3. Integrating the Google Analytics code on your site

If you’re new to Google Analytics (GA), this is a good time to get to know this free tool. It gives you amazing feedback which adds teeth to digital marketing campaigns.

At a glance, GA helps you gather and analyze key website parameters that affect your business. It tracks the number of visitors converging on your domain, the time they spend browsing your pages, and the specific keywords in your site that are most popular with incoming traffic.

Most of all, GA gives you a fairly comprehensive idea of how efficiently your sales funnel is attracting leads and converting customers. The first thing you need to do is check whether the GA tracking code has been inserted in the `<head>` section of your homepage HTML code. For the GA code to carry out its tracking functions correctly, and for Search Console to verify the site with it, make sure the code is placed in the `<head>` section and not elsewhere, such as in the `<body>` section.

Back in the Google Search Console, follow the given path – Manage site > Verify this site till you come to the “Google Analytics tracking code” and follow the guidelines that are displayed. Once you get an acknowledgment that the GA code is verified, refrain from making any changes to the code to prevent the site from reverting to unverified status.
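To check where your tracking code actually sits, the Python sketch below scans a page for the global site tag (gtag.js) loader, a `<script>` whose `src` points at `googletagmanager.com/gtag/js`, and reports whether it appears in `<head>` or `<body>`. It assumes a gtag.js installation; older analytics.js snippets load the library from inline script instead, so this check wouldn’t detect them. The tracking ID in the sample is a placeholder.

```python
from html.parser import HTMLParser

GTAG_LOADER = "googletagmanager.com/gtag/js"


class GtagLocator(HTMLParser):
    """Records which section ("head" or "body") each gtag.js loader appears in."""

    def __init__(self) -> None:
        super().__init__()
        self.section = "outside head/body"
        self.found_in: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("head", "body"):
            self.section = tag
        elif tag == "script" and GTAG_LOADER in (dict(attrs).get("src") or ""):
            self.found_in.append(self.section)

    def handle_endtag(self, tag):
        if tag in ("head", "body"):
            self.section = "outside head/body"


def gtag_locations(html: str) -> list[str]:
    locator = GtagLocator()
    locator.feed(html)
    locator.close()
    return locator.found_in


page = """<html><head><title>Home</title></head>
<body><script async src="https://www.googletagmanager.com/gtag/js?id=UA-000000-1"></script>
</body></html>"""
print(gtag_locations(page))  # ['body']: move the snippet into <head>
```

An empty list means no gtag.js loader was found at all.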

Google Analytics vs. Google Search Console – Knowing the difference and appreciating the benefits

For a newbie, both Google Analytics and Google Search Console appear like they’re focused on the same tasks and selling the same pitch, but nothing could be further from the truth.

GA’s unrelenting focus is on the traffic that your site is attracting. GA tells you how many people visit your site, the kind of platform or app they’re using to reach you, the geographical source of the incoming traffic, how much time each visitor spends browsing what you offer, and which are the most searched keywords on your site.

While GA gives you an in-depth analysis of the efficiency (or otherwise) of your marketing campaigns and customer conversion pitch, Google Search Console peeps under the hood of your website to show you how technically sound it is in meeting the challenges of the internet.

GSC provides insider information on questions such as these:

- Are there issues blocking the Google search bot from crawling?

- Are website modifications being indexed promptly?

- Who links to you and which are your top-linked pages?

- Is there malware or some other cyber threat that needs to be quarantined and neutralized?

- Is your keyword strategy optimized to fulfill searcher intent?

GSC also opens a window to manual actions, if any, that Google has issued against your site for perceived non-compliance with its Webmaster Guidelines.

If you open the Manual Actions report in Search Console and see a green check mark, consider yourself safe. But if there’s a list of issues, you’ll need to fix either the individual pages or sometimes the whole website, and then submit a reconsideration request asking Google to review the matter.

Manual actions must be looked into because failure to respond places your pages in danger of being omitted from Google’s search results. Sometimes, your site may attract manual action for no fault of yours, like a spammy backlink that violates Webmaster quality guidelines, and which you can’t remove.

In such instances, you can use Google’s Disavow Links tool in the console to upload a text file listing the URLs or domains whose links you want Google to ignore.
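The disavow file is plain text with one entry per line: a full URL to disavow a single page, `domain:` followed by a domain name to disavow a whole site, and lines starting with `#` for comments. The Python sketch below assembles such a file from lists you’ve compiled; the URLs, domains, and note are hypothetical examples.

```python
from pathlib import Path


def build_disavow_file(urls: list[str], domains: list[str], note: str = "") -> str:
    """Return disavow-file text: optional comment, then domain: entries, then URLs."""
    lines = [f"# {note}"] if note else []
    lines += [f"domain:{domain}" for domain in sorted(set(domains))]
    lines += sorted(set(urls))
    return "\n".join(lines) + "\n"


def save_disavow_file(path: str, content: str) -> None:
    # Google asks for a .txt file encoded in UTF-8 or 7-bit ASCII.
    Path(path).write_text(content, encoding="utf-8")


text = build_disavow_file(
    urls=["https://spammy.example/links/page1.html"],
    domains=["linkfarm.example", "linkfarm.example"],  # duplicates are dropped
    note="Removal requested, no response",
)
print(text, end="")
```

Disavowing a whole domain is usually simpler than chasing individual URLs when a site links to you from many pages.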

Google doesn’t act on the file instantly: it needs to recrawl and reindex the disavowed URLs before the change is reflected in its search results.

Basically, GA is more invested in the kind of traffic that you’re attracting and converting, while GSC shows you how technically accomplished your site is in responding to searches and in defining the quality of user experience.

Packing power and performance by combining Google Analytics and Google Search Console

You could follow the option of treating GA and GSC as two distinct sources of information and analyze the reports you access, and the world would still go on turning.

But it may be pertinent to remember that both tools present information in vastly different formats even in areas where they overlap. It follows that integrating both tools presents you with additional analytical reports that you’d otherwise be missing; reports that trudge the extra mile in giving you the kind of design and marketing inputs that lay the perfect foundation for great marketing strategies.

Assuming you’re convinced of the need for combining GA and GSC, this is what you do.

Open Google Search Console, click the gear (settings) icon, and choose “Google Analytics Property”.

This shows you a list of all the GA properties associated with your Google account.

Hit the save button on all the accounts that you’ll be focusing on, and with that small step, you’re primed to extract maximum juice from the excellent analytical reporting of the GA-GSC combo.

Just remember to carry out this step only after the website has been verified by Google by following the steps we had outlined earlier.

What should you do with Google Search Console?

1. How to create and submit a sitemap to Google Search Console

Is it practical to hand over the keys to your home (website) to Google and expect Google to navigate the rooms (webpages) without assistance?

You can help Google bots do a better job of crawling the site by submitting the site’s navigational blueprint or sitemap.

The sitemap is your way of showing Google how information is organized across your webpages. You can also include valuable metadata, such as details about the images, videos, and other media on a page, and how frequently each page is updated.

We’re not implying that a sitemap is mandatory for Google Search Console, and you’re not going to be penalized if you don’t submit the sitemap.

But it is in your interest to ensure that Google has access to all the information it needs to do its job and improve your visibility in search engines, and a sitemap makes that job easier. It works most in your favor when you’re submitting a sitemap for an extensive website with many pages and subcategories.

For starters, decide which webpages you want Google’s bots to crawl, and then specify the canonical version of each page.

What this means is that when the same content is available at several URLs, you tell Google which one is the original, preferred version to crawl and index instead of the duplicates.

Then create a sitemap either manually or using a third-party tool.
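If you’d rather generate the sitemap yourself than use a third-party tool, this minimal Python sketch uses the standard library’s ElementTree to write a sitemap in the sitemaps.org XML format, listing only the canonical URLs you chose. The page list, dates, and `changefreq` values are placeholders; `lastmod` and `changefreq` are optional tags in the protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[dict[str, str]]) -> str:
    """Each page needs a "loc"; "lastmod" and "changefreq" are optional."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["loc"]  # characters like & are escaped for you
        for tag in ("lastmod", "changefreq"):
            if tag in page:
                ET.SubElement(url, tag).text = page[tag]
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap = build_sitemap([
    {"loc": "https://www.example.com/", "lastmod": "2019-05-01", "changefreq": "weekly"},
    {"loc": "https://www.example.com/blog/?page=2&sort=new"},
])
print(sitemap)
```

Save the output as, say, sitemap.xml in your root directory; that URL is what you’ll submit to Search Console.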

At this stage, you have the option of referencing the sitemap in your robots.txt file or submitting it directly in Search Console.

Assuming that you’ve taken the trouble to get the site verified by GSC, return to Search Console and navigate to “Crawl” and its subcategory “Sitemaps.”

On clicking “Sitemaps”, you will see an “Add a new sitemap” field. Enter the URL of your XML sitemap and then click “Submit”.

With these simple steps, you’ve effectively submitted your sitemap to Google Search Console.

2. How to modify your robots.txt file so search engine bots can crawl efficiently

There’s a file on your website that doesn’t come up too frequently in SEO circles, yet minor tweaks to it have major SEO-boosting potential. It’s virtually a can of high-potency SEO juice that a lot of people ignore and very few open.

It’s the file that implements the robots exclusion protocol (or standard). If that freaks you out, we’ll keep it simple and call it the robots.txt file.

Even without technical expertise, you can find this file in your site’s root directory, or view it in a browser at yourwebsite.com/robots.txt.

The robots.txt is your website’s point of contact with search engine bots.

Before crawling your webpages, the search bot checks this text file for instructions about which pages should be crawled and which can be ignored (that’s also why it helps to reference your sitemap here).

The bot will follow the rules your file sets out regarding which pages are allowed for crawling and which are disallowed. This is your site’s way of guiding search engines to the pages you wish to highlight and keeping them away from content you don’t want crawled.

There’s no guarantee that robots.txt instructions will be followed by bots, because bots designed for specific jobs may react differently to the same set of instructions. Also, the system doesn’t block other websites from linking to your content even if you wouldn’t want the content indexed.
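To see how a crawler reads these rules, you can test them offline with Python’s built-in `urllib.robotparser`. The rules and URLs below are hypothetical. Treat the result as an approximation: this parser uses plain prefix matching and applies the first matching rule, whereas Googlebot also understands `*` and `$` wildcards and prefers the most specific rule, so the two can disagree on more complex files.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /search

Sitemap: https://www.example.com/sitemap.xml
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
site = "https://www.example.com"
for path in ("/", "/blog/post-1", "/checkout/step-1", "/search?q=shoes"):
    allowed = rules.can_fetch("Googlebot", site + path)
    print("allowed   " if allowed else "disallowed", path)

print(rules.site_maps())  # ['https://www.example.com/sitemap.xml']
```

The `Sitemap:` line shows how a robots.txt file can point bots to your sitemap, independent of any `User-agent` group.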

Before proceeding further, please ensure that you’ve already verified the site; then open the GSC dashboard and click the “Crawl” tab to proceed to “robots.txt Tester.”

This tool enables you to do three things:

- Peep into the robots.txt file to see which actions are currently allowed or disallowed

- Check if there are any crawl errors in the past 90 days

- Make changes to suit your desired mode of interacting with search bots

Once you’ve made necessary changes, it’s vital that the robots.txt file in your source code reflects those changes immediately.

To do that, shortly after making changes, click the “Submit” button below the editing box in Search Console and upload the changed file to your site, placing it in the root directory so that it’s available at www.yourwebsite.com/robots.txt.

To confirm that you’ve completed the mission, go back to Search Console’s robots.txt testing tool and click “Verify live version”, after which you should get a message confirming the modification.

3. How to use the “Fetch as Google” option to update regular website changes

On-page content and title tags undergo regular changes in the website’s life cycle, and it’s a chore to manually get these changes recorded and updated in the Google search engine. Fortunately, GSC comes up with a solution.

Once you’ve located the page that needs a change or update, open Search Console, go to the “Crawl” option, and zero in on “Fetch as Google.” You’ll see a blank URL box in the center.

Enter the URL of the modified page in the box, for example http://yourwebsite.com/specificcategory, then click “Fetch and Render.”

After completing this step, click the “Request indexing” button and choose between the options offered: crawling only this URL, or this URL and its direct links.

What you have just done is ask Googlebot to recrawl the page and index the changes you’ve put through; the changes often become visible in Google’s search results within a couple of days, although Google doesn’t guarantee a timeframe.

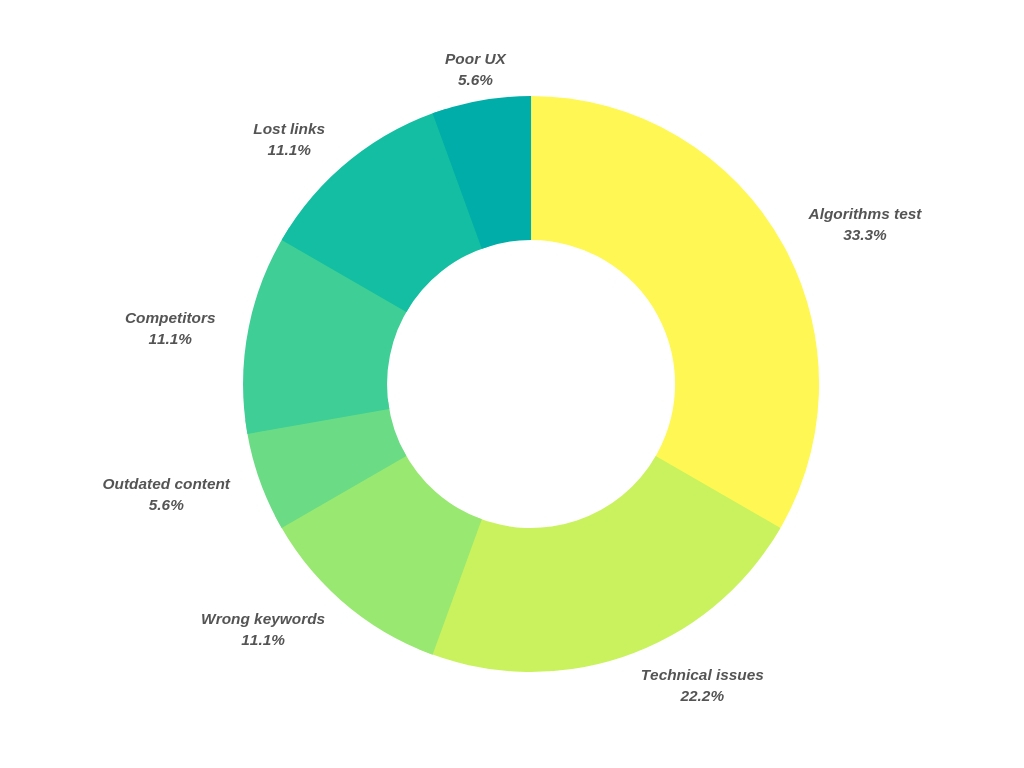

4. How to use Google Search Console to identify and locate site errors

A site error is a technical malfunction which prevents Google search bots from indexing your site correctly.

Naturally, when your site is wrongly configured or slowing down, you are creating a barrier between the site and search engines. This blocks content from figuring in top search results.

If you suspect that something is wrong with your site, you can’t afford to wait for the error to surface on its own, because by then it will already have done the damage.

So you turn to Google Search Console for instant troubleshooting. With GSC, you get a tool that notifies you of errors as they creep into your website.

When you’ve opened the Google Search Console, you’ll see the “Crawl” tab appearing on the left side of the screen. Click the tab and open “Crawl errors”.

What you see now is a listing of all the page errors that Google bots encountered while they were busy indexing the site. The pop up will tell you when the page was last crawled and when the first error was detected, followed by a brief description of the error.

Once an error is identified, you can hand the problem over to your in-house webmaster for rectification.

Under the “Crawl” tab, you’ll also find “Crawl stats.” This is your gateway to graphs showing how many pages Google crawled per day over the previous 90 days, how many kilobytes it downloaded per day during this period, and how much time it took Google to download a page. These stats give a fair indication of your website’s speed and user-friendliness.

Conclusion

A virtual galaxy of webmasters, SEO specialists, and digital marketing honchos would give their right arm and left leg for tools that empower SEO and bring in more customers that’ll ring the cash registers. Tools with all the bells and whistles are within reach, but many of them will make you pay hefty fees to access benefits.

But here’s a tool that’s within easy reach, a tool that promises high and delivers true to expectations without costing you a dollar, and paradoxically, very few people use it.

GSC is every designer’s dream come true, every SEO expert’s plan B, every digital marketer’s Holy Grail when it comes to SEO and the art of website maintenance.

What you gain using GSC are invaluable insights useful in propelling effective organic SEO strategies, and a tool that packs a punch when used in conjunction with Google Analytics.

When you fire the double-barreled gun of Google Analytics and Google Search Console, you can aim for higher search engine rankings and boost traffic to your site, traffic that converts to paying customers.

Dmitriy Shelepin is an SEO expert and co-founder of Miromind.

This marketing news is not the copyright of Scott.Services. Author: Dmitriy Shelepin

The post Complete guide to Google Search Console appeared first on Scott.Services Online Marketing News.

source

https://news.scott.services/complete-guide-to-google-search-console/