Technical optimization is the core element of SEO. Technically optimized sites appeal both to search engines for being much easier to crawl and index, and to users for providing a great user experience.

It’s quite challenging to cover all the technical aspects of your site because hundreds of issues may need fixing. However, some areas pay off far more than others when you get them right. In this article, I will cover the ones you need to focus on first (plus actionable tips on how to succeed in them SEO-wise).

1. Indexing and crawlability

The first thing you want to ensure is that search engines can properly index and crawl your website. You can check the number of your site’s pages that are indexed by search engines in Google Search Console, by googling for site:domain.com or with the help of an SEO crawler like WebSite Auditor.

Source: SEO PowerSuite

In the example above, there’s a large indexing gap: the number of pages indexed in Google lags behind the total number of pages. To avoid indexing gaps and improve the crawlability of your site, pay closer attention to the following issues:

Resources restricted from indexing

Remember, Google can now render all kinds of resources (HTML, CSS, and JavaScript). So if some of them are blocked from crawling, Google won’t see your pages the way they should look and won’t render them properly.

Orphan pages

These are pages that exist on your site but are not linked to from any other page. That makes them hard for search engines to discover by crawling. Make sure your important pages haven’t become orphans.
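As a rough illustration of how an orphan check works, the sketch below compares a list of known pages with the set of pages that receive at least one internal link. The function name `find_orphan_pages`, the URLs, and the link map are hypothetical; in practice both inputs would come from a crawler or sitemap export.

```python
def find_orphan_pages(all_pages, internal_links):
    """Return pages that no other page links to.

    all_pages: iterable of URLs known to exist (e.g. from a sitemap export).
    internal_links: dict mapping a source URL to the URLs it links to.
    """
    linked_to = set()
    for source, targets in internal_links.items():
        for target in targets:
            if target != source:  # a self-link does not rescue a page
                linked_to.add(target)
    return sorted(set(all_pages) - linked_to)


# Hypothetical example site
pages = ["/", "/blog/", "/blog/post-1", "/old-landing-page"]
links = {
    "/": ["/blog/"],
    "/blog/": ["/", "/blog/post-1"],
    "/blog/post-1": ["/blog/"],
}
orphans = find_orphan_pages(pages, links)
print(orphans)
```

In this made-up site, only `/old-landing-page` has no incoming internal links, so it is the one page reported.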

Paginated content

Google has recently admitted it hasn’t supported rel=next and rel=prev for quite some time and now recommends single-page content. You do not need to change anything if you already have paginated content and it makes sense for your site, but it’s advisable to make sure each paginated page can stand on its own.

What to do

- Check your robots.txt file. It should not block important pages on your site.

- Double-check by crawling your site with a tool that can crawl and render all kinds of resources and find all pages.
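A robots.txt check can be partly automated. The sketch below uses Python’s standard `urllib.robotparser` to test a few URLs against a set of rules. The rules and the `example.com` URLs are invented for illustration, and Python’s parser is simpler than Google’s own matching logic, so treat the result as a first pass rather than a definitive answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would load your live file.
robots_txt = """\
User-agent: *
Disallow: /admin/
Disallow: /assets/js/
"""

# Hypothetical URLs that should stay crawlable.
important_urls = [
    "https://example.com/",
    "https://example.com/products/",
    "https://example.com/assets/js/app.js",
]

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Collect every important URL that the rules block for Googlebot.
blocked = [url for url in important_urls if not parser.can_fetch("Googlebot", url)]
print(blocked)
```

Here the JavaScript file is flagged because the `/assets/js/` directory is disallowed, which is exactly the kind of rule that keeps Google from rendering a page properly.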

2. Crawl budget

Crawl budget can be defined as the number of visits from a search engine bot to a site during a particular period of time. For example, if Googlebot visits your site 2.5K times per month, then 2.5K is your monthly crawl budget for Google. Though it’s not quite clear how Google assigns crawl budget to each site, there are two major theories stating that the key factors are:

- Number of internal links to a page

- Number of backlinks

Back in 2016, my team ran an experiment to check the correlation between both internal and external links and crawl stats. We created projects for 11 sites in WebSite Auditor to check the number of internal links. Next, we created projects for the same 11 sites in SEO SpyGlass to check the number of external links pointing to every page.

Then we checked the crawl statistics in the server logs to understand how often Googlebot visits each page. Using this data, we found the correlation between internal links and crawl budget to be very weak (0.154), and the correlation between external links and crawl budget to be very strong (0.978).
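To give an idea of what checking crawl statistics in server logs can look like, here is a minimal sketch that counts Googlebot requests per URL. It is not the tooling used in the experiment. It assumes the common "combined" access log format, the sample lines are invented, and it trusts the user agent string, which can be spoofed (a stricter check would also verify the requesting IP).

```python
import re
from collections import Counter

# Captures the request path and the user agent from a combined-format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(log_lines):
    """Count requests per path whose user agent mentions Googlebot."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Invented sample lines, for illustration only.
sample_log = [
    f'66.249.66.1 - - [10/Mar/2019:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Mar/2019:10:00:05 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    f'66.249.66.1 - - [10/Mar/2019:10:01:00 +0000] "GET /pricing HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Mar/2019:10:02:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
]
hits = googlebot_hits(sample_log)
print(hits.most_common())
```

Per-page counts like these are what you would then compare against the number of internal and external links pointing to each page.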

However, these results no longer seem to be relevant. We re-ran the same experiment last week and found no correlation between either backlinks or internal links and crawl budget. In other words, backlinks used to play a role in increasing your crawl budget, but that doesn’t seem to be the case anymore. To increase your crawl budget, you need to use good old techniques that make search engine spiders crawl as many pages of your site as possible and find your new content quicker.

What to do

- Make sure important pages are crawlable. Check your robots.txt: it shouldn’t block any important resources (including CSS and JavaScript).

- Avoid long redirect chains. The best practice here is no more than two redirects in a row.

- Fix broken pages. If a search bot stumbles upon a page with a 4XX/5XX status code (a 404 “not found” error, a 500 “internal server” error, or any similar error), one unit of your crawl budget goes to waste.

- Clean up your sitemap. To make your content easier for crawlers and users to find, remove 4XX pages, unnecessary redirects, non-canonical pages, and blocked pages.

- Disallow pages with no SEO value. Create disallow rules in the robots.txt file for pages such as the privacy policy, old promotions, and terms and conditions.

- Maintain internal linking efficiency. Make your site structure tree-like and shallow so that crawlers can easily access all important pages on your site.

- Take care of your URL parameters. If you have dynamic URLs leading to the same page, specify their parameters in Google Search Console > Crawl > URL Parameters.
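The redirect chain tip from the list above is easy to check once you have a map of redirects. The sketch below follows a URL through such a map and counts the hops. The function name `redirect_chain`, the `max_hops` safety limit, and the URLs are hypothetical; a real audit would build the map from crawl data.

```python
def redirect_chain(start_url, redirects, max_hops=10):
    """Follow a URL through a redirect map and return every URL visited."""
    chain = [start_url]
    while chain[-1] in redirects and len(chain) <= max_hops:
        next_url = redirects[chain[-1]]
        if next_url in chain:  # redirect loop: record it and stop
            chain.append(next_url)
            break
        chain.append(next_url)
    return chain


# Hypothetical redirect map: source URL -> redirect target
redirects = {
    "/old-page": "/new-page",
    "/new-page": "/newer-page",
    "/newer-page": "/final-page",
}
chain = redirect_chain("/old-page", redirects)
hops = len(chain) - 1
print(" -> ".join(chain), f"({hops} redirects)")
```

This example chain has three redirects in a row, one more than the recommended maximum of two, so `/old-page` should point straight at `/final-page`.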

3. Site structure

Intuitive sites feel like a piece of art. Behind this feeling, however, is a well-thought-out site structure and navigation that helps users effortlessly find what they want. What’s more, an efficient site architecture helps bots access all the important pages on your site. To make your site structure work, focus on two crucial factors:

1. Sitemap

With the help of a sitemap, search engines find your site, read its structure, and discover fresh content.

What to do

If for some reason you don’t have a sitemap, create one and submit it to Google Search Console. You can check whether it’s coded properly with the help of the W3C validator.

Keep your sitemap:

- Updated – Make changes to it when you add or remove something from the site;

- Concise – Keep it to no more than 50,000 URLs per file;

- Clean – Free from errors, redirects, and blocked resources.
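For a small site, a sitemap can be generated with a few lines of code. The sketch below builds a minimal XML sitemap with Python’s standard library and refuses to exceed the 50,000-URL limit. The helper name `build_sitemap` and the `example.com` URLs are hypothetical, and the output includes only the required `<loc>` element.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit mentioned above


def build_sitemap(urls):
    """Return a minimal XML sitemap for the given list of URLs."""
    if len(urls) > MAX_URLS:
        raise ValueError("Too many URLs for one sitemap file; split it up")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical URLs
sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/blog/",
])
print(sitemap_xml)
```

Regenerating the file whenever pages are added or removed is one simple way to keep it updated and clean.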

2. Internal linking structure

Everyone knows about the benefits of external links, but most don’t pay much attention to internal links. However, savvy internal linking helps spread link juice among all pages efficiently and gives a traffic boost to pages with less authority. What’s more, you can create topic clusters by interlinking related content within your site to show search engines your site’s content has high authority in a particular field.

What to do

The strategies may vary depending on your goals, but these elements are critical for any goal:

Shallow click-depth: John Mueller confirmed that the fewer clicks it takes to get to a page from your homepage, the better. Following this advice, try to keep each page no more than three clicks away from the homepage. If you have a large site, use breadcrumbs or the internal site search.

Use of contextual links: When you create content for your site, remember to include links to your pages with related content (articles, product pages, etc.). Such links usually have more SEO weight than navigational ones (those in headers or footers).

Informational anchor texts: Include keywords in the anchor texts of internal links so that they tell readers what to expect from the linked content. Don’t forget to do the same for the alt attributes of image links.
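Click depth is simply the shortest path from the homepage to a page, so it can be computed with a breadth-first search over the internal link graph. The sketch below assumes you already have such a graph, for example from a crawler export; the function name `click_depths` and the URLs are hypothetical.

```python
from collections import deque


def click_depths(homepage, internal_links):
    """Breadth-first search: fewest clicks from the homepage to each page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in internal_links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical link graph: page -> pages it links to
links = {
    "/": ["/category/"],
    "/category/": ["/category/page-2/"],
    "/category/page-2/": ["/product-a"],
    "/product-a": ["/product-a/reviews"],
}
depths = click_depths("/", links)
too_deep = [page for page, depth in depths.items() if depth > 3]
print(too_deep)
```

In this made-up graph, the reviews page sits four clicks from the homepage, so it would be a candidate for a more direct link. Pages missing from `depths` altogether cannot be reached from the homepage at all.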

4. Page speed

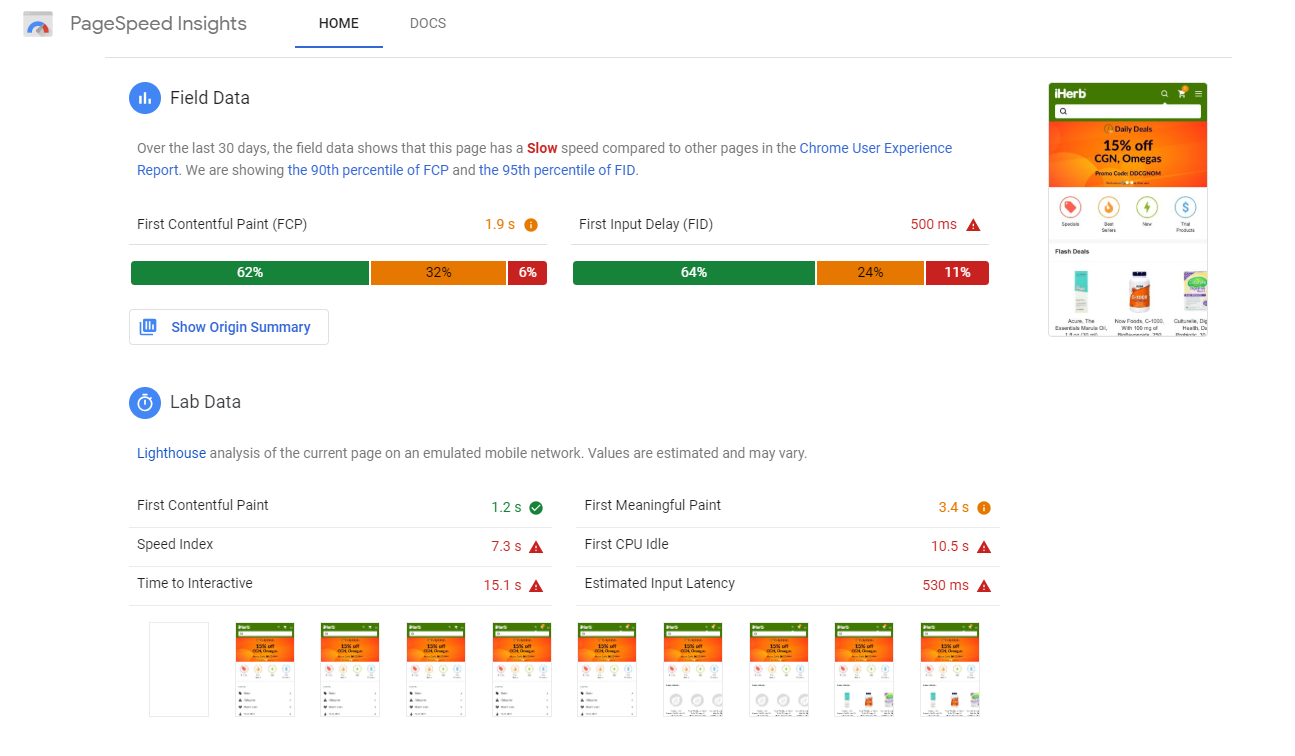

Speed is a critical factor for the Internet of today. A one-second delay can lead to a grave traffic drop for most businesses. No surprise then that Google is also into speed. Desktop page speed has been a Google ranking factor for quite a while. In July 2018, mobile page speed became a ranking factor as well. Prior to the update, Google launched a new version of its PageSpeed Insights tool, in which speed was measured differently. Besides technical optimization and lab data (basically, the way a site loads in ideal conditions), Google started to use field data, such as the loading speed experienced by real users, taken from the Chrome User Experience Report.

Source: PageSpeed Insights dashboard

What’s the catch? When field data is taken into account, your lightning-fast site may be considered slow if most of your users have a slow Internet connection or old devices. At that point in time, I got curious how page speed actually influenced mobile search positions of pages. As a result, my team and I ran an experiment (before and immediately after the update) to see whether there was any correlation between page speed and pages’ positions in mobile search results.

The experiment showed the following:

- No correlation between a mobile site’s position and the site’s speed (First Contentful Paint and DOM Content Loaded);

- High correlation between a site’s position and its average Page Speed Optimization Score.

It means that for the time being, it’s the level of your site’s technical optimization that matters most for your rankings. The good news is that this metric is totally under your control. Google actually provides a list of optimization tips for speeding up your site. The list is as long as 22 factors, but you do not have to fix all of them. There are usually five to six that you need to pay attention to.

What to do

While Google’s list covers all 22 factors, let’s look at how to deal with those that can gravely slow down page rendering:

Landing page redirects: Create a responsive site; choose a redirect type suitable for your needs (permanent 301, temporary 302, JavaScript, or HTTP redirects).

Uncompressed resources: Remove unneeded resources before compression, gzip all compressible resources, use different compression techniques for different resources, etc.

Long server response time: Analyze site performance data to detect what slows it down (use tools like WebPageTest, Pingdom, GTmetrix, Chrome DevTools).

Absence of caching policy: Introduce a caching policy according to Google recommendations.

Unminified resources: Use minification together with compression.

Heavy images: Serve responsive images and leverage optimization techniques, such as using vector formats, web fonts instead of encoding text in an image, removing metadata, etc.
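To get a feel for what gzip compression does to text resources, here is a small sketch using Python’s standard `gzip` module. The HTML fragment is invented and deliberately repetitive, so the saving is larger than you should expect on a real page. On a live site, compression is normally switched on in the web server or CDN settings rather than in application code.

```python
import gzip

# Invented, highly repetitive HTML standing in for a text resource.
html = ("<div class='product'><p>Example product description</p></div>\n" * 200).encode("utf-8")

compressed = gzip.compress(html)
ratio = len(compressed) / len(html)
print(f"{len(html)} bytes -> {len(compressed)} bytes ({ratio:.1%} of original)")

# Compression is lossless: decompressing returns the original bytes.
restored = gzip.decompress(compressed)
```

Minified files still compress well, which is why minification and compression are used together.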

5. Mobile-friendliness

As the number of mobile searchers was growing exponentially, Google wanted to address the majority of its users and rolled out mobile-first indexing at the beginning of 2018. By the end of 2018, Google was using mobile-first indexing for over half of the pages shown in search results. A mobile-first index means that now Google crawls the web from a mobile point of view: a site’s mobile version is used for indexing and ranking even for search results shown to desktop users. In case there’s no mobile version, Googlebot will simply crawl a desktop one. It’s true that neither mobile-friendliness nor a responsive design is a prerequisite for a site to be moved to the mobile-first index. Whatever version you have, your site will be moved to the index anyway. The trick here is that this version, as it is viewed by a mobile user agent, will determine how your site ranks in both mobile and desktop search results.

What to do

- If you’ve been thinking about going responsive, now is the best moment to do it, according to Google’s Webmaster Trends Analyst John Mueller.

- Don’t be afraid of using expandable content on mobile, such as hamburger and accordion menus, tabs, expandable boxes, and more. However, say no to intrusive interstitials.

- Test your pages for mobile-friendliness with Google’s Mobile-Friendly Test tool. It evaluates the site according to various usability criteria, like viewport configuration, size of text and buttons, and use of plugins.

- Run an audit of your mobile site by using a custom user agent in your SEO crawler to make sure all your important pages can be reached by search engine crawlers and are free from grave errors. Pay attention to titles, H1s, structured data, and other elements.

- Track the mobile performance of your site in Google Search Console.
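Viewport configuration, one of the usability criteria mentioned above, is easy to spot-check yourself. The sketch below looks for a viewport meta tag in a page’s HTML using Python’s standard `html.parser`. It covers only this one criterion and is no substitute for a full mobile-friendliness test; the class and function names and the sample pages are hypothetical.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Remembers the content of the last <meta name="viewport"> tag seen."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content")


def find_viewport(html):
    """Return the viewport meta content, or None if the tag is missing."""
    finder = ViewportFinder()
    finder.feed(html)
    return finder.viewport


# Hypothetical pages
responsive_page = (
    '<html><head><meta name="viewport" '
    'content="width=device-width, initial-scale=1"></head><body></body></html>'
)
legacy_page = "<html><head><title>Old page</title></head><body></body></html>"

print(find_viewport(responsive_page))
print(find_viewport(legacy_page))
```

A page that returns `None` here has no viewport tag, so mobile browsers will typically render it at desktop width.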

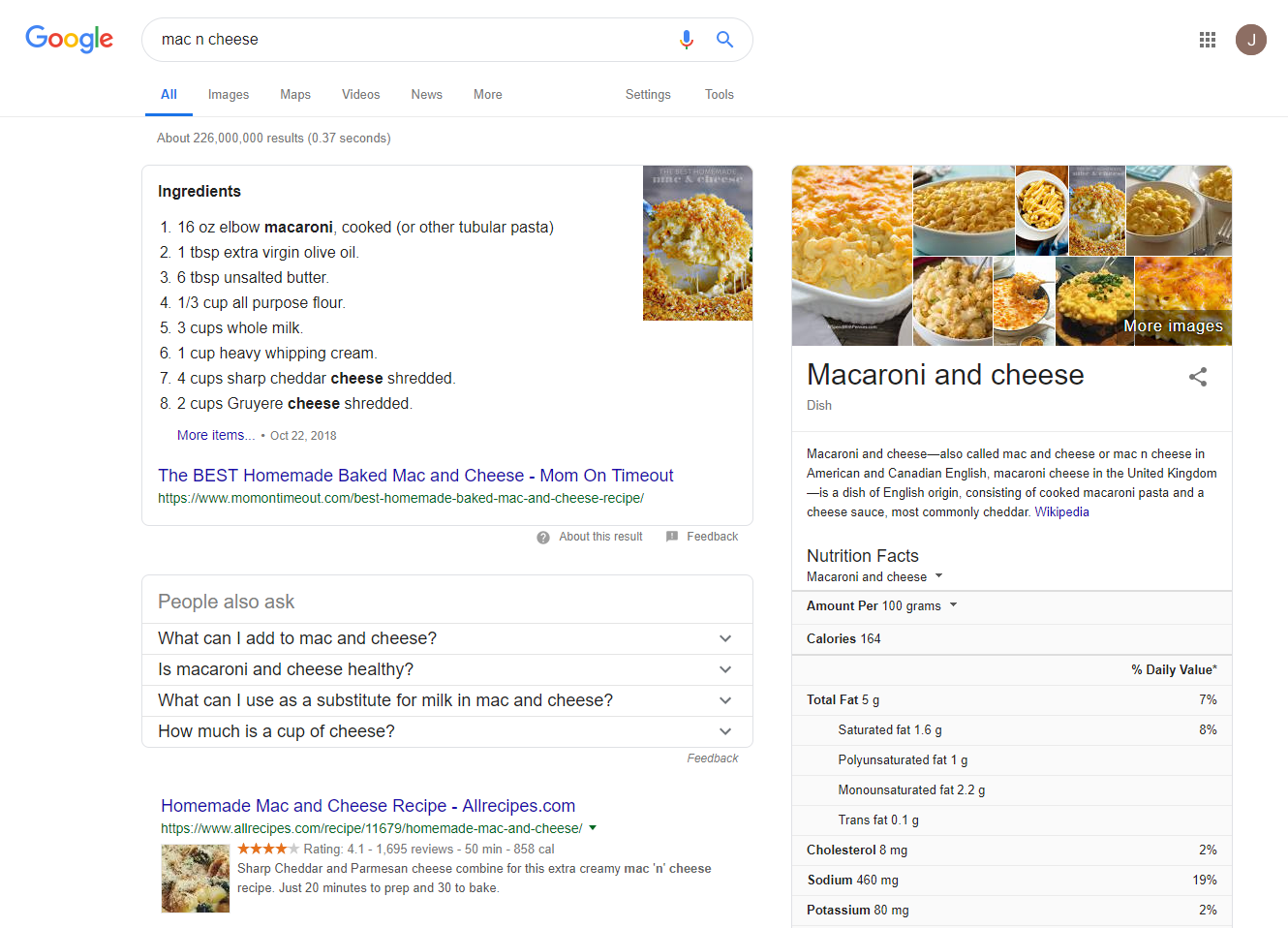

6. Structured data

As Google SERPs are being enhanced visually, users are becoming less inclined to click. They try to get all they need right from the search results page without clicking on any result. And if they do click, they go for a result that caught their attention. Rich results are the ones that usually benefit from this visibility, with image and video carousels, rating stars, and review snippets.

Rich results require structured data implementation. Structured data is coded within the page’s markup and provides information about its content. There are about 600 types of structured data available. Not all of them can make your results rich, but implementing structured data improves your chances of getting a rich snippet in Google. What’s more, it helps crawlers understand your content better in terms of categories and subcategories (for instance, book, answer, recipe, map). Still, there are about 30 different types of rich results that are powered by schema markup. Let’s see how to get them.

What to do

- Go to schema.org and choose those schemas suitable for content on your site. Assign those schemas to URLs.

- Create structured data markup. Don’t worry, you do not need developer skills to do that. Use Google’s Structured Data Markup Helper, which will guide you through the process. Then test your markup in the Structured Data Testing Tool or in its updated version, the Rich Results Testing Tool. Keep in mind that Google supports structured data in three formats: JSON-LD, Microdata, and RDFa, with JSON-LD being the recommended one.

- Don’t expect Google to display your enhanced results right away. It can take a few weeks, so use Fetch as Google in Search Console to get your pages recrawled faster. Bear in mind that Google can decide not to show them at all if they do not meet the guidelines.

- Don’t be surprised if you get a rich snippet without structured data implementation. John Mueller confirmed that sometimes your content is enough to produce rich results.
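For readers who prefer to see what JSON-LD markup actually looks like, here is a minimal sketch that builds an `Article` snippet with Python’s standard `json` module. The helper name `article_json_ld` is hypothetical, and only two properties are filled in; check Google’s guidelines for the properties each rich result type requires.

```python
import json


def article_json_ld(headline, author_name):
    """Return a <script> tag holding minimal schema.org Article markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
    }
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


snippet = article_json_ld(
    "How to master technical SEO: Six areas to attack now",
    "Aleh Barysevich",
)
print(snippet)
```

The resulting tag goes into the page’s HTML, and the page can then be run through the testing tools mentioned above.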

Summary

Technical SEO is something you cannot do without if you’d like to see your site rank higher in search results. While there are numerous technical elements that need your attention, the major areas to focus on for max ranking pay-off are loading speed, crawlability issues, site structure, and mobile-friendliness.

Aleh is the Founder and CMO at SEO PowerSuite and Awario.

Author: Aleh Barysevich

The post How to master technical SEO: Six areas to attack now appeared first on Scott.Services Online Marketing News.

source https://news.scott.services/how-to-master-technical-seo-six-areas-to-attack-now/
