It has been a challenging month for YouTube.

As we recently reported, fresh concerns over child safety on the service came to light back on 17th February.

In a video published to the site, vlogger Matt Watson detailed how the service was being exploited by paedophiles, who were using comment sections under innocuous videos of children to leave sexually provocative messages, to communicate with each other, and to link out to child pornography.

Of course, journalists and news sites were quick to level criticism at YouTube. Many pointed out that this wasn’t the first time child safety on the service had been called into question. Others criticised its methods for safeguarding children as too ‘whack-a-mole’ in their approach.

And then came the actions of the advertisers – with Nestle, AT&T and Epic Games (creator of Fortnite) all pulling their ads from the service.

So how has YouTube responded? Is it doing enough?

Memo directly sent to advertisers

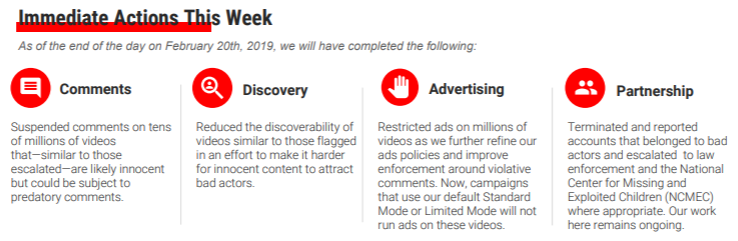

On 20th February YouTube sent out a memo to brands advertising on the service.

It detailed the ‘immediate actions’ it was taking to ensure children are safe in light of the recent allegations from Watson. These included suspending comments and reporting accounts to the NCMEC.

The memo reiterated that child safety is YouTube’s No. 1 priority, but also admitted there was more work to be done.

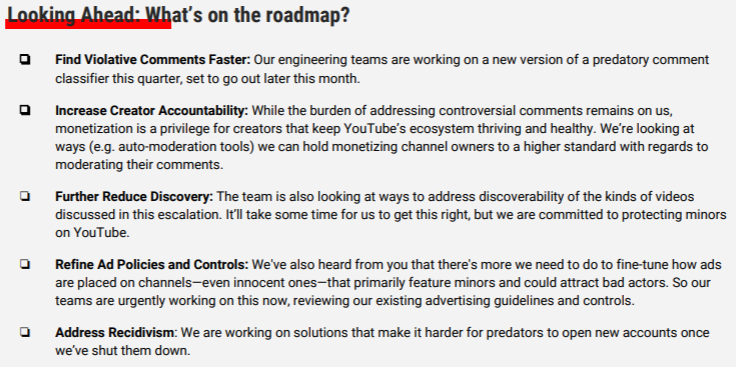

It laid out a roadmap of tweaks and improvements, including improving the service’s ability to find predatory comments (set to be implemented this month) and potentially changing how ads are placed on channels.

Changes to Community Guidelines strikes system – are these related?

In a potentially related move, YouTube also announced via a recent blog post that it was going to make changes to its Community Guidelines strikes system.

The changes – which came into force on 25th February – include a warning for users the first time their content crosses the line.

YouTube says: ‘Although the content will be removed, there will be no other penalty on the channel. There will be only one warning and unlike strikes, the warning will not reset after 90 days.’

The ‘three strike’ system still exists but is stricter and more straightforward. Now a first strike results in a one-week freeze on the ability to upload any new content to the service. Previously, first strikes just resulted in a freeze on live-streaming.

A second strike in any 90-day period will result in a two-week freeze on the ability to upload any new content. Ultimately, a third strike in any 90-day period will result in channel termination.
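As a rough illustration, the rules described above can be sketched in a few lines of Python. This is only a reading of the policy as summarised in this article, not YouTube's actual implementation: the `Channel` class, the `record_violation` method and the penalty labels are hypothetical names, and treating "any 90-day period" as a rolling window in which older strikes stop counting is an assumption.

```python
from datetime import date, timedelta

# Assumption: "any 90-day period" is modelled as a rolling 90-day window.
STRIKE_WINDOW = timedelta(days=90)


class Channel:
    """Hypothetical model of a channel's enforcement history."""

    def __init__(self):
        self.warned = False  # the single warning never resets
        self.strikes = []    # dates of strikes that still count

    def record_violation(self, when):
        """Return the penalty for a guideline violation on the date `when`."""
        if not self.warned:
            # First offence: content is removed, but no other penalty applies.
            self.warned = True
            return "warning"
        # Drop strikes that are 90 or more days old, then add the new one.
        self.strikes = [d for d in self.strikes if when - d < STRIKE_WINDOW]
        self.strikes.append(when)
        active = len(self.strikes)
        if active == 1:
            return "one-week upload freeze"
        if active == 2:
            return "two-week upload freeze"
        return "channel terminated"


channel = Channel()
for day in (date(2019, 3, 1), date(2019, 3, 5), date(2019, 4, 1), date(2019, 5, 1)):
    print(day, "->", channel.record_violation(day))
```

Run as written, the four violations produce a warning, a one-week freeze, a two-week freeze and finally termination, because all three strikes fall within 90 days of each other.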

That YouTube has taken this opportunity to address its creator community directly is interesting.

The Guardian has reported that the fallout from Watson’s video resulted in a number of prominent YouTube users criticizing him, rather than the service. Their reasoning was that his video was an overreaction and a deliberate attempt to drive advertisers away.

Additionally, a report at ABC News shed light on stories from creators who have been the victims of false claims and extortion attempts by bad actors who promise to remove strikes only after they’ve received payment via PayPal or Bitcoin.

With this in mind, we can see that YouTube has been quite diplomatic in how it has rolled out this Community Guidelines change. Imposing stricter penalties against a backdrop of better transparency and simpler rules is quite laudable.

Further questions over safety since

In the wake of Watson’s video, further news stories have emerged which relate directly and indirectly to child safety on YouTube.

On 24th February, pediatrician Free Hess exposed that some children’s videos available on YouTube Kids had hidden footage detailing how to commit suicide spliced into them (as reported at The Washington Post).

Additionally, on 25th February the BBC reported that the service was stopping adverts being shown on channels which showed anti-vaccination content.

And over the past couple of days, widespread internet concern has raged over “The Momo Challenge,” a supposed challenge encouraging minors to perform dangerous and potentially self-harming acts.

However, this morning The Atlantic reported that this was a digital hoax, one that followed a similar cycle to the so-called Blue Whale challenge, teens eating toxic Tide Pods, and the cinnamon challenge. According to that report, none of these was found to have any reported deaths or injuries associated with it.

And yesterday, YouTube tweeted this:

We want to clear something up regarding the Momo Challenge: We’ve seen no recent evidence of videos promoting the Momo Challenge on YouTube. Videos encouraging harmful and dangerous challenges are against our policies.

— YouTube (@YouTube) February 27, 2019

The company has also just updated their Creator Blog with a post titled, “More updates on our actions related to the safety of minors on YouTube.”

In it, they summarize “the main steps we’ve taken to improve child safety on YouTube since our update last Friday.”

These steps include:

- Disabling comments on videos featuring minors

- Launching a new comments classifier

- Taking action on creators who cause egregious harm to the community

It does seem that they are moving quickly to remedy the problems. But I think anyone would agree — they’ve had quite the month.

So the challenge is certainly ongoing…

All this does highlight the difficulty YouTube has in keeping all its millions of viewers, creators, and advertisers safe and happy.

We know the service is constantly updating its algorithm across its search function and its recommendations in order to give users better – more trustworthy – content.

We can also be quite sure that there has been a fair amount of activity in protecting minors on the service since 2017, when unsuitable content featuring Disney and Marvel characters was found on YouTube Kids. This timeframe is in line with the aforementioned memo, which states that the service has been working hard to improve in this regard for the past 18 months.

I’m not sure it’s entirely fair, then, to call YouTube’s approach to safeguarding children a ‘whack-a-mole approach’ or one which only sees the site take action when the instances gain media attention.

The volume of content and users on the service is so large that it depends on the community to produce the content and – at times – to monitor how it is used. In this instance, a user flagged an issue and YouTube responded very quickly. The service is always improving, but changes, tweaks, and improvements are not always newsworthy. The same can be said for Google.

Yes, there is more to be done. As online video continues to boom and the creator community continues to grow, we can expect issues to arise.

But I think it is unlikely that YouTube wouldn’t be proactive here. After all, its very existence depends on having great videos, trustworthy content, a safe community of users who are having a positive experience on the site, and an ecosystem where advertisers want to be.


This marketing news is not the copyright of Scott.Services – please click here to see the original source of this article. Author: Luke Richards


The post YouTube + child safety: Is the service doing enough? appeared first on Scott.Services Online Marketing News.

source https://news.scott.services/youtube-child-safety-is-the-service-doing-enough/
