This case study walks you through a step-by-step process for removing low-value pages from your site and from the Google index.

Page removal can be disruptive for your site. If you choose to follow this process, make sure you understand the rationale behind each step, and only implement changes if you have deep knowledge of the website, SEO expertise and adequate resources.

The following case study is part of an overall SEO Audit and is the cornerstone of the SEO strategy.

During the content audit, a number of blog posts appeared to bring in no traffic, backlinks or conversions, and very little traction on social media. However, they had helped to bring in new (offline) business partnerships with other companies, so they had to be handled separately on the technical side.

Page quality is a hot topic right now, largely because it is hard to define what quality content is. Is quality important to rank? Or to help a potential customer? Or to define your business? All of these questions need to be considered when hiring an agency or a consultant to create content.

A website can rank highly without converting readers into leads, or have high-converting pages with low traffic. Often the top 10 pages are responsible for most of the traffic, and sometimes for most of the conversions too.

Task Prioritization

The onboarding process is important with new clients. Prior analysis of the website, together with an understanding of the client (and their customers), their resources, site performance, the competition, the market and the goals, defines the strategy. Usually, at this point, clients are given a list of implementable steps, but it is also important to help them prioritize.

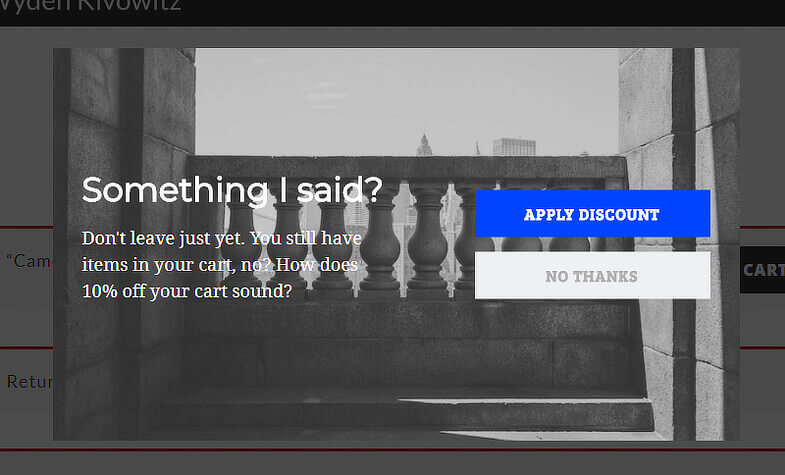

Case Study: A Slash Horror Show

The website had received no previous SEO input, and 99.97% of its pages were low-value content that needed to be eliminated.

The client had a double goal:

- Improving brand awareness against competitors.

- Increasing organic conversions (currently mainly via PPC).

The business sells historical reports for a category of used products. They run database queries and provide free reports. With the paid version, customers are provided with all the information required to buy the best product.

Superficially the website looked successful. Further analysis revealed that it only ranked for a small set of keywords, within a narrow topic.

The many technical issues were narrowed down to five urgent problems:

- Mixed http & https protocols, with www and non-www pages.

- A loop of redirects due to multiple site re-designs.

- Incorrect canonical tag implementations.

- Thousands of tag pages.

- Poor-quality duplicate content, representing more than 90% of the pages.

Mixed protocols, redirects and incorrect canonicals were resolved within weeks.

To fix the duplicate tag pages, I used the same method as for the other millions of URLs, which is outlined below.

The duplication arose because there was a page for every product (one for each brand/model and also one for each product code); the client had mistakenly thought this would help them rank on Google. They also had a large four-year archive of hundreds of daily on-site searches. However, each free report contained very little information and, in many cases, the difference between two reports was fewer than 10 words (reports averaged 50 words in total).

Analysis of the previous 12 months of activity revealed that the top 20 landing pages were responsible for more than 85% of the organic sales and 60% of the traffic. In contrast, the free reports contributed less than 4% of the conversions and less than 8% of the traffic.

In effect, they were making Google crawl (and spend resources on) millions of low-value, auto-generated pages rather than the user-oriented pages.

The following report from OnCrawl clearly shows the relationship between pageviews and pages crawled by bots:

A Supportive Team

The duplicate pages needed to be deleted from the website, as well as from the Google index. Because the client was attached to these pages and to the business model behind them, convincing them was not going to be easy.

However, the results achieved during the initial fixing period reassured the supervisors that this was the correct plan of action.

Through the initial impact of the work undertaken, I was able to win the confidence of the management and the support of the dev team.

SEO Pruning At Scale

A step-by-step guide on how to remove multiple pages from Google:

“Sometimes you make something better not by adding to it, but by taking away” (Kevin Indig)

SEO pruning can be carried out at very different scales. In this case, 99.97% of the pages were removed, leaving only 1,200.

Removal of unnecessary content helps to save resources (time, technical infrastructure, scraping issues, etc.), improve site quality, gain visibility and enhance your site’s interaction with Google.

Step 1: Tag The Pages

To delete your pages from the SERP (and the Google index), you need to be consistent with the signals provided in the next steps.

The first flag I used was the ‘noindex’ tag.

Be careful, and remember that this is only a directive. It does not stop Googlebot from crawling the URL, and the page stays in the index until Googlebot recrawls it and reads the directive.

Please be mindful of: not disallowing any of these pages in robots.txt, because bots that are blocked from crawling cannot read the noindex directive.
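As a rough illustration of the two checks in this step, the following Python sketch tests whether a page carries a noindex robots meta tag and whether robots.txt still lets Googlebot reach it. The function names (`has_noindex`, `is_crawlable`, `ready_for_removal`) and the sample URLs are hypothetical, and this is not the tooling used in the case study. Note that Python's `urllib.robotparser` applies robots.txt rules in file order, which may differ from how Google resolves conflicting rules, so treat the result as a first check only.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for part in attr.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def has_noindex(html):
    """True if the HTML contains a robots meta tag with 'noindex'."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """True if the given robots.txt content does not block the URL."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules.can_fetch(user_agent, url)


def ready_for_removal(html, robots_txt, url):
    """A page sends a consistent signal only if it is noindexed AND crawlable."""
    return has_noindex(html) and is_crawlable(robots_txt, url)
```

For example, a page with `<meta name="robots" content="noindex, follow">` under a path that robots.txt disallows would fail `ready_for_removal`, because the bot never gets to read the tag.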

Step 2: Follow The Pages With The Sitemaps

You can find out how Google identifies your pages in the “Coverage” report of the Google Search Console. Since it is important to verify that the bots are aware of all the pages, I asked the dev team to create a sitemap for all the pages I wanted to remove.

While removing these thousands of pages, I split the sitemaps into separate files by content type (service and product pages, info and privacy, FAQs, tags, categories, etc.). I then submitted them to the Google Search Console.

This step is important not only for Google to find the pages on the site, but to later follow the flow of the Valid pages (versus the Errors and the Excluded ones) in the “Coverage” report.

For example, in the case study outlined earlier, I thought the website had roughly 1.5 million pages, but the sitemaps revealed that there were actually 4,860,094 of them!
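The split of sitemaps by content type described in this step could be sketched as follows. This is a minimal Python example under my own assumptions: the function name `build_sitemaps`, the file naming (`sitemap-<type>-<n>.xml`) and the sample URLs are hypothetical, and only the mandatory `<loc>` tag is written. The limit of 50,000 URLs per file comes from the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # limit defined by the sitemaps.org protocol


def build_sitemaps(urls_by_type, max_urls=MAX_URLS_PER_FILE):
    """Return {filename: xml_string}, with one or more files per content type."""
    files = {}
    for page_type, urls in urls_by_type.items():
        for start in range(0, len(urls), max_urls):
            urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
            for url in urls[start:start + max_urls]:
                entry = ET.SubElement(urlset, "url")
                ET.SubElement(entry, "loc").text = url
            number = start // max_urls + 1
            xml_body = ET.tostring(urlset, encoding="unicode")
            files[f"sitemap-{page_type}-{number}.xml"] = (
                '<?xml version="1.0" encoding="UTF-8"?>\n' + xml_body
            )
    return files


if __name__ == "__main__":
    # Hypothetical input: URLs already grouped by content type.
    sitemaps = build_sitemaps({
        "tags": ["https://domain.com/tag/a", "https://domain.com/tag/b"],
        "reports": ["https://domain.com/report/1"],
    })
    for name in sitemaps:
        print(name)
```

Keeping one file (or one numbered series of files) per content type makes it easier to follow each group separately in the Coverage report.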

Please be mindful of: The sitemap should implement as many consistent signals as possible. The following tags can be used to define how to handle the URLs:

Step 3: Review Internal Links and Value Pages

In the case study above I removed the internal links to the pages prior to deleting them, to avoid potential broken links or wasted link equity. It is critical to look at the site’s overall structure. Removing a large number of pages will change the site’s organization, and this means the internal linking strategy will require some modifications.

I checked the backlink profile, specifically for pages and folders that generated traffic or conversions. These URLs were redirected to the most relevant pages, improved or consolidated.

Thousands of pages can share characteristics, such as a folder or part of the URL. Screaming Frog can be used to analyze a specific pattern and to verify internal and external links.

Step 4: Create Listing and Index Pages

Next, I created listing pages containing the URLs I wanted to delete. These pages are submitted to the Google Search Console later.

Each listing page should include the URLs to be deleted. To make the process verifiable, and to ensure Google could crawl all the URLs, I limited each listing page to roughly 1,000 URLs.

As I had to handle hundreds of thousands of URLs, I created multiple listing and index pages, because Google applies a daily quota to the URLs that can be fetched.

The listing and index pages should not appear in the SERP, so I applied the “noindex,follow” meta tag.

Alternatively, you can use page archives and pagination.

Example of a listing page (https://domain.com/listing-1):

Example of an index page (https://domain.com/index-1):

Upload them to your server.

Please be mindful of:

Using a standardized naming convention, so the log analysis will be easier.

Listing pages:

domain.com/listing-PageType-1

domain.com/listing-PageType-2

domain.com/listing-PageType-3

Index pages:

domain.com/index-PageType-1

domain.com/index-PageType-2

domain.com/index-PageType-3
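A minimal Python sketch of how listing and index pages like these might be generated is shown below. It assumes roughly 1,000 URLs per listing page, the “noindex,follow” meta tag and the naming convention above. The function names, the HTML template and the single index page per content type are my own simplifications, not the files used in the case study.

```python
from html import escape

URLS_PER_LISTING = 1000  # the case study kept listing pages to roughly 1,000 URLs

PAGE_TEMPLATE = """<!DOCTYPE html>
<html>
<head>
<meta name="robots" content="noindex,follow">
<title>{title}</title>
</head>
<body>
<ul>
{items}
</ul>
</body>
</html>
"""


def render_page(title, links):
    """Render a plain HTML page that links to every URL in `links`."""
    items = "\n".join(
        f'<li><a href="{escape(url, quote=True)}">{escape(url)}</a></li>'
        for url in links
    )
    return PAGE_TEMPLATE.format(title=escape(title), items=items)


def build_listing_and_index(urls, page_type, base="https://domain.com",
                            per_page=URLS_PER_LISTING):
    """Return {slug: html} for the listing pages plus one index page."""
    pages = {}
    listing_urls = []
    for number, start in enumerate(range(0, len(urls), per_page), start=1):
        slug = f"listing-{page_type}-{number}"
        pages[slug] = render_page(slug, urls[start:start + per_page])
        listing_urls.append(f"{base}/{slug}")
    # The index page links to the listing pages, not to the deleted URLs.
    index_slug = f"index-{page_type}-1"
    pages[index_slug] = render_page(index_slug, listing_urls)
    return pages
```

With 2,500 report URLs, for instance, `build_listing_and_index(urls, "report")` returns three listing pages and one index page; each value can then be written to a file and uploaded.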

Step 5: Delete the Pages

Prior to page deletion, I ensured that all the above steps were successfully completed.

To define the right time to cull, I checked the Coverage report (see Step 2) to ensure I was able to visualize all the pages listed in the sitemap. Lastly, I ensured I saved the pages with business value.

You have two options for the status code returned by the deleted pages:

Status code 404

The server has not found anything matching the Request-URI. No indication is given of whether the condition is temporary or permanent. The 410 (Gone) status code SHOULD be used if the server knows, through some internally configurable mechanism, that an old resource is permanently unavailable and has no forwarding address. This status code is commonly used when the server does not wish to reveal exactly why the request has been refused, or when no other response is applicable.

Status code 410

The requested resource is no longer available at the server and no forwarding address is known. This condition is expected to be considered permanent. Clients with link editing capabilities SHOULD delete references to the Request-URI after user approval. If the server does not know, or has no facility to determine, whether or not the condition is permanent, the status code 404 (Not Found) SHOULD be used instead. This response is cacheable unless indicated otherwise.

The 410 response is primarily intended to assist the task of web maintenance by notifying the recipient that the resource is intentionally unavailable and that the server owners desire that remote links to that resource be removed. Such an event is common for limited-time, promotional services and for resources belonging to individuals no longer working at the server’s site. It is not necessary to mark all permanently unavailable resources as “gone” or to keep the mark for any length of time — that is left to the discretion of the server owner.

Whilst best practice is to return a 410 (Gone) status code, there are different views on how to deal with these pages. I chose status code 404.
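How the server answers for deleted URLs depends entirely on your stack, but the choice between 404 and 410 can be illustrated with a small Python sketch. The path patterns (`/report/`, `/tag/`), the `status_for` helper and the bare WSGI `app` are hypothetical and only show the decision logic; they are not the implementation used on the client's site.

```python
import re
from http import HTTPStatus

# Hypothetical patterns describing the removed sections of the site.
REMOVED_PATTERNS = [re.compile(r"^/report/"), re.compile(r"^/tag/")]


def status_for(path, removed_patterns=REMOVED_PATTERNS, use_gone=False):
    """Return the HTTP status a path should answer with after the cull."""
    if any(pattern.search(path) for pattern in removed_patterns):
        return HTTPStatus.GONE if use_gone else HTTPStatus.NOT_FOUND
    return HTTPStatus.OK


def app(environ, start_response):
    """Minimal WSGI app that answers with the status chosen above."""
    status = status_for(environ.get("PATH_INFO", "/"))
    start_response(f"{status.value} {status.phrase}",
                   [("Content-Type", "text/plain")])
    return [status.phrase.encode()]
```

Switching `use_gone` to `True` makes the same paths answer with 410 instead of 404; whichever you choose, keep it consistent across all the deleted URLs.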

Step 6: Declare the Action Just Implemented

Consistency is important. I had removed all the internal links to the deleted pages. I had consolidated the pages with business value (traffic, conversions, branding, good backlinks). I had resolved the main technical issues. It was now time to re-submit the sitemaps with the affected pages.

Googlebot should also crawl the pages included in the listing and index files – see Step 4.

I used “Fetch as Google”* in the old version of the Google Search Console, requested the indexing and chose the “Crawl this URL and its direct links” submit method.

*By the time you read this, the old Fetch tool will no longer be available. In the new Search Console, use “Request indexing” via the “URL Inspection Tool”.

Step 7: Analyse the Server Log Files

Now it is time to inspect the log files.

In the following weeks, I monitored the log files to ensure that Googlebot had crawled the index and listing pages and the URLs linked from them, and that the correct 404 status code was returned.
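A log check of this kind could look like the Python sketch below. It assumes the server writes the common "combined" access log format and identifies Googlebot only by its user-agent string, which can be spoofed. The regular expression and the function name `googlebot_status_counts` are my own, so adapt them to your log format.

```python
import re
from collections import Counter

# Assumes the "combined" access log format used by Apache and nginx.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_counts(lines):
    """Count (path, status) pairs for requests whose user agent names Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        counts[(match["path"], int(match["status"]))] += 1
    return counts


if __name__ == "__main__":
    sample = [
        '66.249.66.1 - - [10/Oct/2019:13:55:36 +0000] "GET /report/123 HTTP/1.1" '
        '404 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; '
        '+http://www.google.com/bot.html)"',
    ]
    for (path, status), hits in googlebot_status_counts(sample).items():
        print(path, status, hits)
```

Filtering the result for the listing and index slugs shows whether Googlebot reached them, and filtering for the deleted paths shows whether they return the expected 404.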

Please be mindful that:

As this is so critical, keep every step as simple as possible to avoid errors.

Split the pages into your sitemaps – and the listing pages – by content type (many CMSs allow this).

If you work with your dev team, group the URLs by a template, type or a common factor/pattern, such as extension (page123.ext), folder (/report/), number (product-237), etc.
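Grouping URLs by a common pattern, as suggested above, can be sketched in a few lines of Python. The patterns mirror the examples in the text (extension, folder, number), but the labels, the regular expressions and the function name `group_urls` are hypothetical and need to be adapted to your own URL structure.

```python
import re
from collections import defaultdict
from urllib.parse import urlparse

# Hypothetical patterns; adapt them to the templates of your own site.
PATTERNS = {
    "report": re.compile(r"^/report/"),       # folder
    "product": re.compile(r"^/product-\d+$"),  # number
    "ext": re.compile(r"\.ext$"),              # extension
}


def group_urls(urls, patterns=PATTERNS):
    """Group URLs by the first pattern their path matches, else 'other'."""
    groups = defaultdict(list)
    for url in urls:
        path = urlparse(url).path
        label = next(
            (name for name, regex in patterns.items() if regex.search(path)),
            "other",
        )
        groups[label].append(url)
    return dict(groups)
```

Each resulting group can feed its own sitemap and its own series of listing pages, which keeps the later log analysis simple.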

Step 8: Follow the Sitemap

The sitemap(s) created in Step 2 helped me to follow the decrease in Valid (as well as Error and Excluded) pages and the visibility trend.

Step 9: Delete Sitemap, Index and Listing Files

Once all the changes you applied have taken effect, you can delete the sitemaps and the index and listing files. Only cull these files after the pages have disappeared from the index. This can take a few months and can be affected by multiple variables. Do not rush this, and keep monitoring your site.

In my case the number of pages was very high, and it took me 4 months to delete all the files.

My team and I provided all the possible consistent signals to Google. Only 2 weeks later – and with 0.02% of the original pages – we started to see a clear trend in terms of enhanced organic visibility and traffic, which still continues today.

What You Should Expect

You should see the start of a trend. Listed below are the main expected changes:

Increase of traffic/visibility

An improved site in terms of crawled URLs. It’s now time to improve other aspects, such as content, ranking, conversion rate, brand, etc.

Slight decrease of traffic/visibility

This is to be expected, so plan for a small drop.

It should not largely affect your conversions.

Follow your overall SEO plan, and improve content accordingly (should be part of your improvement plan).

Same traffic/visibility

No real change in visibility or traffic is a positive outcome, as it shows that the deleted pages didn’t bring value.

This just means the overall health of the site has been improved.

In Conclusion

Deletion of low-value pages should be part of an overall SEO audit and undertaken with a structured plan for business improvements. After removal of the low-value content, every remaining page should have defined business goals: conversion, branding, support, traffic acquisition, etc.

Always ask the website owner for advice and feedback.

The post How to Bulk Remove Thousand of URLs in Googles Index appeared first on Scott.Services Online Marketing News.

source

https://news.scott.services/how-to-bulk-remove-thousand-of-urls-in-googles-index/